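Hadoop is a distributed-systems infrastructure developed by the Apache Software Foundation. It lets users write distributed programs without knowing the low-level details of distribution, and use the full power of a cluster for high-speed computation and storage. Hadoop implements a distributed file system, the Hadoop Distributed File System (HDFS). HDFS is highly fault tolerant and is designed to run on low-cost hardware. It provides high throughput for accessing application data, which suits applications with very large data sets. HDFS relaxes some POSIX requirements so that data in the file system can be read as a stream (streaming access). The two core components of the Hadoop framework are HDFS and MapReduce: HDFS provides storage for massive amounts of data, and MapReduce provides computation over it.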

Cluster layout: NameNode on 192.168.31.243, DataNode on 192.168.31.165.

Test environment: CentOS 6 x86_64.

Software: `jdk-6u31-linux-i586.bin` (a 32-bit JDK) and `hadoop-1.0.0.tar.gz`.

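Install packages and prepare the user and directories (on both nodes). `ld-linux.so.2` pulls in the 32-bit glibc that the i586 JDK needs on a 64-bit system:

```shell
yum install -y 'rsync*' 'openssh*'
yum install -y ld-linux.so.2
groupadd hadoop
useradd hadoop -g hadoop
mkdir /usr/local/hadoop
mkdir -p /usr/local/java
service iptables stop    # stops the firewall until the next reboot; chkconfig iptables off keeps it off
```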

Generate an SSH key pair on 192.168.31.243 (do the same on 192.168.31.165). Press Enter at each prompt to accept the default path and an empty passphrase:

```shell
ssh-keygen -t rsa
```

```
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
```

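Copy the public key to both nodes, then copy the installation files to 192.168.31.165. Copying `id_rsa.pub` directly onto `authorized_keys` overwrites any keys already there, and `/root/.ssh` must already exist on the target:

```shell
scp /root/.ssh/id_rsa.pub 192.168.31.165:/root/.ssh/authorized_keys
scp /root/.ssh/id_rsa.pub 192.168.31.243:/root/.ssh/authorized_keys
scp jdk-6u31-linux-i586.bin hadoop-1.0.0.tar.gz 192.168.31.165:/root/
```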
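Because overwriting `authorized_keys` loses keys from the other node, an append-only approach is safer. The following is a minimal sketch, assuming bash and GNU coreutils; `add_authorized_key` is a hypothetical helper, not part of OpenSSH or Hadoop. It appends a key once and applies the permissions sshd expects (700 on the directory, 600 on the file).

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a public key to SSH_DIR/authorized_keys
# without overwriting existing keys, then fix permissions for sshd.
# Usage: add_authorized_key PUBKEY_FILE SSH_DIR
add_authorized_key() {
  local pubkey_file=$1 ssh_dir=$2
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth"
  # Append only if the exact key line is not already present.
  if ! grep -qxF -f "$pubkey_file" "$auth"; then
    cat "$pubkey_file" >> "$auth"
  fi
  chmod 600 "$auth"
}
```

For example, `add_authorized_key /root/.ssh/id_rsa.pub /root/.ssh` on 192.168.31.243 authorizes the node's own key for `localhost`. From the sending side, `ssh-copy-id root@192.168.31.165` (from the openssh-clients package) appends in the same way.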

Install the JDK (a self-extracting archive) under `/usr/local/java`:

```shell
mv jdk-6u31-linux-i586.bin /usr/local/java/
cd /usr/local/java/
chmod +x jdk-6u31-linux-i586.bin
./jdk-6u31-linux-i586.bin
```

Run `vim /etc/profile` and append the following at the end of the file:

```shell
# set java environment
export JAVA_HOME=/usr/local/java/jdk1.6.0_31
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin
# set hadoop path
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
```

Then reload it:

```shell
source /etc/profile
```
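Editing `/etc/profile` by hand is easy to repeat by accident, which duplicates the `PATH` entries. A minimal sketch of an idempotent version, assuming bash; `append_env_block` is a hypothetical helper that uses the block's own first comment line as a marker to detect a previous run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Java/Hadoop environment block to a
# profile file only if it is not there yet.
append_env_block() {
  local profile=$1
  local marker="# set java environment"
  if grep -qxF "$marker" "$profile" 2>/dev/null; then
    return 0   # block already present; leave the file unchanged
  fi
  # Quoted 'EOF' keeps $PATH, $JAVA_HOME etc. literal in the file.
  cat >> "$profile" <<'EOF'
# set java environment
export JAVA_HOME=/usr/local/java/jdk1.6.0_31
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin
# set hadoop path
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
EOF
}
```

Usage: `append_env_block /etc/profile && source /etc/profile`.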

Verify the Java installation:

```shell
java -version
```

```
java version "1.6.0_31"
Java(TM) SE Runtime Environment (build 1.6.0_31-b04)
Java HotSpot(TM) Client VM (build 20.6-b01, mixed mode, sharing)
```
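To check the version in a script rather than by eye, the version string can be pulled out of the first line. A minimal sketch, assuming the Oracle JDK output format shown above; `java_version_of` is a hypothetical helper name. `java -version` writes to stderr, so redirect it into the pipe.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `java -version` output on stdin and print
# the quoted version string from the 'java version "..."' line.
java_version_of() {
  sed -n 's/^java version "\(.*\)"$/\1/p'
}
```

Usage: `[ "$(java -version 2>&1 | java_version_of)" = "1.6.0_31" ] || echo "another java is first in PATH"`. An empty result usually means a JDK with a different banner (for example OpenJDK) is being picked up.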

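Unpack Hadoop and give it to the `hadoop` user. Because `/usr/local/hadoop` was already created above, `mv hadoop-1.0.0 /usr/local/hadoop` puts the release one level too deep, while the rest of this guide expects `bin/` and `conf/` directly under `/usr/local/hadoop`. Move the contents instead:

```shell
tar zxvf hadoop-1.0.0.tar.gz
mv hadoop-1.0.0/* /usr/local/hadoop/
chown -R hadoop:hadoop /usr/local/hadoop
```

With the plain `mv hadoop-1.0.0 /usr/local/hadoop`, `ll /usr/local/hadoop/` shows the nested directory:

```
drwxr-xr-x 14 hadoop hadoop 4096 Dec 16 2011 hadoop-1.0.0
```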
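A quick layout check helps catch the nesting problem before the later steps fail on missing paths. A minimal sketch, assuming bash; `check_hadoop_home` is a hypothetical helper that looks for an executable `bin/hadoop` and a `conf/` directory directly under the given path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether DIR is usable as HADOOP_HOME,
# or whether the release was moved in one level too deep.
check_hadoop_home() {
  local home=$1
  if [ -x "$home/bin/hadoop" ] && [ -d "$home/conf" ]; then
    echo "ok"
  elif [ -x "$home/hadoop-1.0.0/bin/hadoop" ]; then
    echo "nested: move $home/hadoop-1.0.0/* up into $home"
  else
    echo "missing: no bin/hadoop under $home"
  fi
}
```

Usage: `check_hadoop_home /usr/local/hadoop`.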

Back up `hadoop-env.sh` and edit it:

```shell
cp /usr/local/hadoop/conf/hadoop-env.sh /usr/local/hadoop/conf/hadoop-env.sh.bak
vim /usr/local/hadoop/conf/hadoop-env.sh
```

Change the commented-out line

```shell
# export JAVA_HOME=/usr/lib/j2sdk1.5-sun
```

to

```shell
export JAVA_HOME=/usr/local/java/jdk1.6.0_31
```
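The same change can be made without an editor, which is handy when repeating it on 192.168.31.165. A minimal sketch, assuming GNU sed (for `-i`); `set_hadoop_java_home` is a hypothetical helper that replaces the commented-out default line, or an earlier uncommented `JAVA_HOME` line, with the given path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set JAVA_HOME in hadoop-env.sh in place.
# Matches "# export JAVA_HOME=..." as well as "export JAVA_HOME=...".
set_hadoop_java_home() {
  local env_file=$1 java_home=$2
  sed -i "s|^#\{0,1\} *export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
}
```

Usage: `set_hadoop_java_home /usr/local/hadoop/conf/hadoop-env.sh /usr/local/java/jdk1.6.0_31`.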

Back up the three site files before editing them:

```shell
cd /usr/local/hadoop/conf
for f in core-site.xml hdfs-site.xml mapred-site.xml; do cp "$f" "$f.bak"; done
```

Run `vim core-site.xml` and set `hadoop.tmp.dir` and `fs.default.name`:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <!-- file system properties -->
  <property>
    <name>fs.default.name</name>
    <value>hdfs://192.168.31.243:9000</value>
  </property>
</configuration>
```
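All three `*-site.xml` files share the same `<configuration>/<property>/<name>/<value>` shape, so they can also be generated by script. A minimal sketch, assuming bash; `write_site_xml` is a hypothetical helper that takes the output file followed by NAME VALUE pairs and writes values verbatim (no XML escaping), which is enough for the paths, URIs and numbers in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Hadoop *-site.xml from NAME VALUE pairs.
# Usage: write_site_xml OUTFILE NAME1 VALUE1 [NAME2 VALUE2 ...]
write_site_xml() {
  local out=$1; shift
  {
    echo '<?xml version="1.0"?>'
    echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
    echo '<configuration>'
    while [ $# -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$out"
}
```

Usage: `write_site_xml core-site.xml hadoop.tmp.dir /usr/local/hadoop/tmp fs.default.name hdfs://192.168.31.243:9000`. If libxml2's `xmllint` is installed, `xmllint --noout core-site.xml` reports any well-formedness errors.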

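Run `vim hdfs-site.xml` and set the block replication factor:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>
```

This setup has at most two DataNodes, so a replication factor of 3 cannot be met and HDFS will report blocks as under-replicated. A value no larger than the number of DataNodes avoids this.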

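Run `vim mapred-site.xml`. `mapred.job.tracker` takes the JobTracker's plain `host:port`. Note the address is 192.168.31.243 (not 192.168.21.243), and the property name must not have a leading space:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>192.168.31.243:9001</value>
  </property>
</configuration>
```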
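A wrong JobTracker address is easy to miss, since `start-all.sh` still prints "starting jobtracker". A minimal sketch of a sanity check, assuming bash; `check_job_tracker` is a hypothetical helper, and its list of allowed hosts is an assumption for this two-node cluster. It accepts `local` or a plain `host:port` with a numeric port.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a mapred.job.tracker value.
# Allowed hosts are assumed to be the two nodes of this guide.
check_job_tracker() {
  local value=$1
  case "$value" in
    "local") echo "ok"; return 0 ;;
    *://*) echo "bad: remove the URL scheme, use host:port"; return 1 ;;
  esac
  local host=${value%:*} port=${value##*:}
  case "$port" in
    ''|*[!0-9]*) echo "bad: port '$port' is not a number"; return 1 ;;
  esac
  case "$host" in
    192.168.31.243|192.168.31.165) echo "ok" ;;
    *) echo "bad: host $host is not a cluster node"; return 1 ;;
  esac
}
```

Usage: `check_job_tracker 192.168.31.243:9001`.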

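In Hadoop 1.x, `conf/masters` lists the host that runs the SecondaryNameNode. Back it up and replace `localhost` with the NameNode address:

```shell
cp masters masters.bak
vim masters
```

```
192.168.31.243
```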

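On 192.168.31.165, back up `slaves` and replace `localhost` with the node's own address:

```shell
cp slaves slaves.bak
vim /usr/local/hadoop/conf/slaves
```

```
192.168.31.165
```

`start-all.sh` reads `conf/slaves` on the machine where it runs. For the NameNode (192.168.31.243) to start the DataNode and TaskTracker on 192.168.31.165, that address must also be listed in `slaves` on 192.168.31.243. The startup log below shows the DataNode and TaskTracker being started only on `localhost`.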
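The replication factor should not exceed the number of DataNodes listed in `slaves`. A minimal sketch of that check, assuming bash and that `<name>` and `<value>` sit on consecutive lines in `hdfs-site.xml`. The helper names are hypothetical. When the property is absent, it falls back to 3, the `dfs.replication` default.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare dfs.replication with the DataNode count.
count_slaves() {
  # Non-blank, non-comment lines in the slaves file.
  grep -v '^[[:space:]]*#' "$1" | grep -c '[^[:space:]]'
}
replication_of() {
  grep -A1 '<name>dfs.replication</name>' "$1" |
    sed -n 's:.*<value>\([0-9]*\)</value>.*:\1:p'
}
check_replication() {
  local rep nodes
  rep=$(replication_of "$1"); nodes=$(count_slaves "$2")
  if [ "${rep:-3}" -gt "$nodes" ]; then
    echo "warn: dfs.replication=${rep:-3} but only $nodes DataNode(s)"
  else
    echo "ok"
  fi
}
```

Usage, from `/usr/local/hadoop/conf` on the NameNode: `check_replication hdfs-site.xml slaves`.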

Copy the site files to 192.168.31.165 (its `hadoop-env.sh` needs the same `JAVA_HOME` change), then format HDFS on the NameNode:

```shell
scp core-site.xml hdfs-site.xml mapred-site.xml hadoop-env.sh 192.168.31.165:/usr/local/hadoop/conf/
/usr/local/hadoop/bin/hadoop namenode -format
```

```
Warning: $HADOOP_HOME is deprecated.
16/09/21 22:51:13 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = java.net.UnknownHostException: centos6: centos6
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 1.0.0
STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0 -r 1214675; compiled by 'hortonfo' on Thu Dec 15 16:36:35 UTC 2011
************************************************************/
16/09/21 22:51:14 INFO util.GSet: VM type = 32-bit
16/09/21 22:51:14 INFO util.GSet: 2% max memory = 19.33375 MB
16/09/21 22:51:14 INFO util.GSet: capacity = 2^22 = 4194304 entries
16/09/21 22:51:14 INFO util.GSet: recommended=4194304, actual=4194304
16/09/21 22:51:14 INFO namenode.FSNamesystem: fsOwner=root
16/09/21 22:51:14 INFO namenode.FSNamesystem: supergroup=supergroup
16/09/21 22:51:14 INFO namenode.FSNamesystem: isPermissionEnabled=true
16/09/21 22:51:14 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100
16/09/21 22:51:14 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
16/09/21 22:51:14 INFO namenode.NameNode: Caching file names occuring more than 10 times
16/09/21 22:51:14 INFO common.Storage: Image file of size 110 saved in 0 seconds.
16/09/21 22:51:14 INFO common.Storage: Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.
16/09/21 22:51:14 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at java.net.UnknownHostException: centos6: centos6
************************************************************/
```

The format itself succeeded ("has been successfully formatted"), but `java.net.UnknownHostException: centos6` means the machine's hostname does not resolve. Add each node's IP and hostname to `/etc/hosts` on both machines (for example `192.168.31.243 centos6`), giving the two machines distinct hostnames if they currently share one.
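Whether a hostname already has an entry can be checked before rerunning the format. A minimal sketch, assuming bash and awk; `host_in_hosts_file` is a hypothetical helper that searches a hosts-format file (default `/etc/hosts`) for the name in any alias column, skipping comment lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if NAME appears as a hostname or alias
# in a hosts-format file. Usage: host_in_hosts_file NAME [FILE]
host_in_hosts_file() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '
    !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
    END { exit !found }
  ' "$file"
}
```

Usage: `host_in_hosts_file "$(hostname)" || echo "add an entry for $(hostname) to /etc/hosts"`.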

Start all daemons:

```shell
/usr/local/hadoop/bin/start-all.sh
```

```
Warning: $HADOOP_HOME is deprecated.
starting namenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-namenode-centos6.out
The authenticity of host 'localhost (::1)' can't be established.
RSA key fingerprint is 81:d9:c6:54:a9:99:27:c0:f7:5f:c3:15:d5:84:a0:99.
Are you sure you want to continue connecting (yes/no)? yes
localhost: Warning: Permanently added 'localhost' (RSA) to the list of known hosts.
root@localhost's password:
localhost: starting datanode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-datanode-centos6.out
The authenticity of host '192.168.31.243 (192.168.31.243)' can't be established.
RSA key fingerprint is 81:d9:c6:54:a9:99:27:c0:f7:5f:c3:15:d5:84:a0:99.
Are you sure you want to continue connecting (yes/no)? yes
192.168.31.243: Warning: Permanently added '192.168.31.243' (RSA) to the list of known hosts.
root@192.168.31.243's password:
192.168.31.243: starting secondarynamenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-secondarynamenode-centos6.out
starting jobtracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-jobtracker-centos6.out
root@localhost's password:
localhost: starting tasktracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-tasktracker-centos6.out
```

The `Warning: $HADOOP_HOME is deprecated.` line is harmless in Hadoop 1.x; `export HADOOP_HOME_WARN_SUPPRESS=1` in `/etc/profile` silences it. The yes/no prompts appear only on the first connection to each host. The `password:` prompts, however, mean passwordless SSH is not working for `localhost` and `192.168.31.243`: check that `/root/.ssh/authorized_keys` on 192.168.31.243 contains its own `id_rsa.pub`, with `/root/.ssh` at mode 700 and `authorized_keys` at 600.

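Check that the data directories were created under `hadoop.tmp.dir`:

```shell
ll /usr/local/hadoop/tmp/
```

```
drwxr-xr-x 5 root root 4096 Sep 21 22:53 dfs
drwxr-xr-x 3 root root 4096 Sep 21 22:53 mapred
```

Seeing both `dfs` and `mapred` shows that formatting and startup got far enough to write data; `jps` shows which daemons are actually running.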

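```shell
jps
```

```
3237 SecondaryNameNode
3011 NameNode
3467 Jps
```

Only the NameNode and SecondaryNameNode are running here, although `start-all.sh` also launched a DataNode, JobTracker and TaskTracker. If those are missing, check their logs under `/usr/local/hadoop/logs/`.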
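Comparing `jps` output against the expected daemon list makes missing processes obvious. A minimal sketch, assuming bash and awk; `missing_daemons` is a hypothetical helper. Its default list assumes the NameNode host also runs DataNode and TaskTracker (as the `localhost` entries in the startup log suggest); pass a shorter list as arguments otherwise.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print the
# expected Hadoop 1.x daemons that are not running.
# Usage: jps | missing_daemons [DAEMON ...]
missing_daemons() {
  local out expected d missing=""
  out=$(cat)
  expected=${*:-NameNode SecondaryNameNode JobTracker DataNode TaskTracker}
  for d in $expected; do
    # jps prints "PID ClassName"; match the class name exactly.
    printf '%s\n' "$out" | awk -v d="$d" '$2 == d { f = 1 } END { exit !f }' \
      || missing="$missing $d"
  done
  echo "${missing# }"
}
```

Fed the `jps` output above, it prints `JobTracker DataNode TaskTracker`.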

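Check the listening web ports. 50070 is the NameNode web UI and 50090 the SecondaryNameNode web UI; the PIDs match the `jps` output:

```shell
netstat -tuplna | grep 500
```

```
tcp 0 0 :::50070 :::* LISTEN 3011/java
tcp 0 0 :::50090 :::* LISTEN 3237/java
```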
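The same check can label each listening port with its daemon. A minimal sketch, assuming bash, awk and the `netstat -tuplna` column layout shown above (local address in column 4, state in column 6). The helper names are hypothetical; the port table lists the Hadoop 1.x default web UI ports.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: map Hadoop 1.x default web UI ports to daemons.
port_name() {
  case "$1" in
    50070) echo "NameNode web UI" ;;
    50090) echo "SecondaryNameNode web UI" ;;
    50030) echo "JobTracker web UI" ;;
    50060) echo "TaskTracker web UI" ;;
    50075) echo "DataNode web UI" ;;
    *) echo "unknown" ;;
  esac
}
# Read netstat lines on stdin; print "port name" for each LISTEN socket.
listening_hadoop_ports() {
  awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }' |
    while read -r p; do
      echo "$p $(port_name "$p")"
    done
}
```

Usage: `netstat -tuplna | grep 500 | listening_hadoop_ports`. On a fully started NameNode host, 50030, 50060 and 50075 should appear as well.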

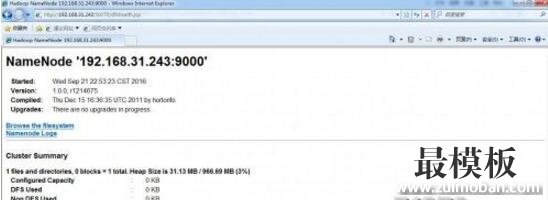

NameNode status page: http://192.168.31.243:50070/dfshealth.jsp


Installing Hadoop on CentOS 6

Date: 2016-10-20 14:08. Source: unknown. Author: 最模板编辑 (Zuimoban editor).
